Halfway between synthetic robotic environments, agent simulations and reality we have computer games: a good testbed scenario for artificial intelligences.

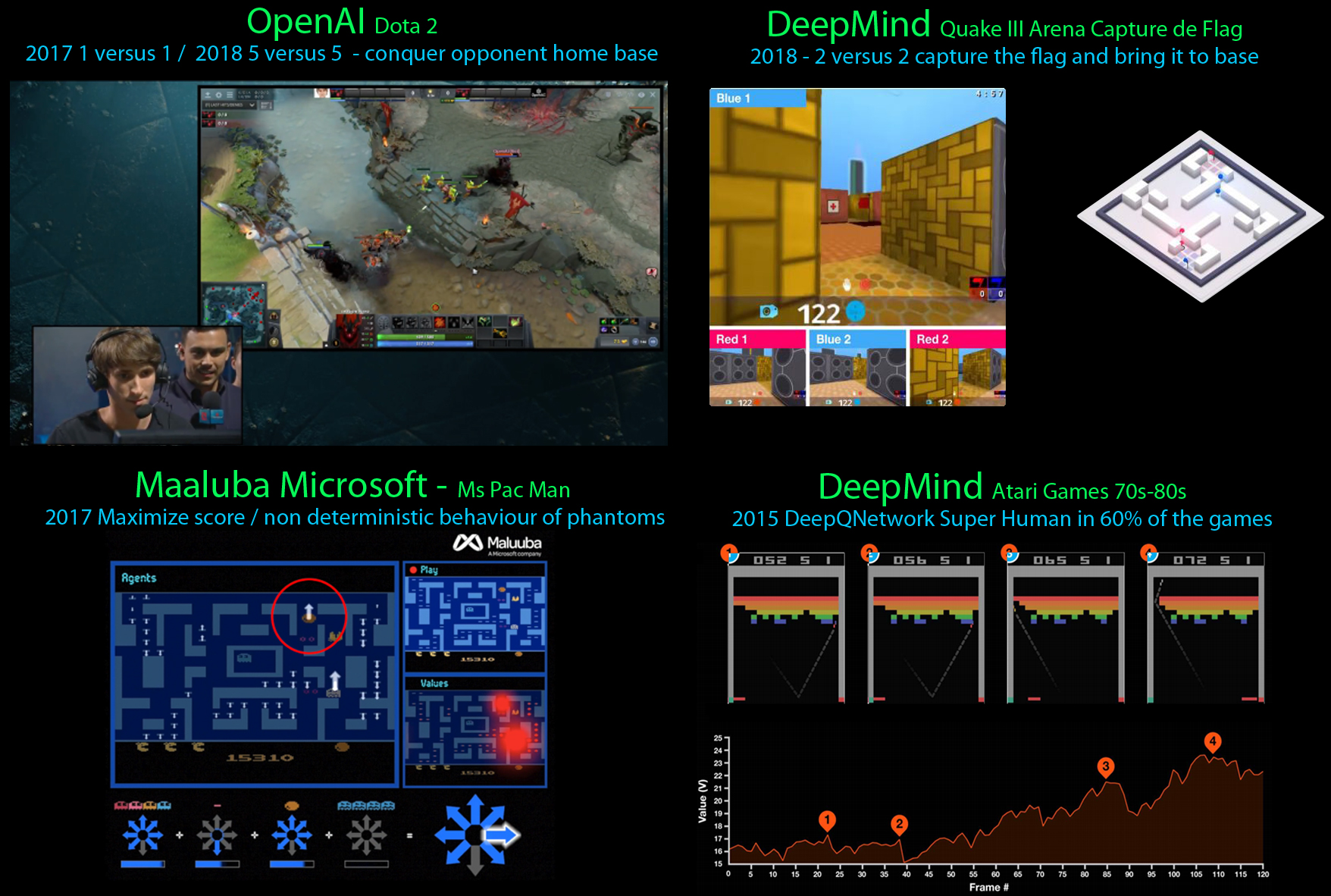

OpenAI is preparing the program that will control up to 5 agents against 5 human professional players in the complex game Dota2, a game in which the objective is to destroy and conquer the opponent team’s castle (link). OpenAI bot has already proven superiority against human players on the 1 versus 1 Dota2 with restricted play (link). The addressed multiplayer scenario is non-trivial and this is because when learning from inputs that include other’s agent behaviours we cannot guarantee the convergence of learning algorithms having to deal with non-stationary environments.

Deep Mind has also addressed the multiplayer game in a recently published a paper (link) in which a program reaches superhuman level play in the game “Quake III Arena Capture the Flag” a 2 vs. 2 scenario in which teams have to capture the opponent’s flag and bring it back to the base.

It is amazing to see how in the 90s a Deep Learning Neural Network was used to learn Backgammon using Reinforcement Learning TD-Gammon (link). 25 years after, classic Atari games from the 80s were addressed with success by Google Deep Mind with its Deep Reinforcement Learning (DQN) algorithm achieving human and superhuman performance in 60% of the game set (link). In 15% of the games, the algorithm DQN had serious difficulties mainly because of the involvement of long-term planning, like in the game Montezuma Revenge where DQN achieved 0 points. The main reason for this failure is that distant rewards cannot be achieved by the initial random exploration of Reinforcement Learning algorithms. Recently OpenAI published very good results achieved in Montezuma by learning from human play (see link).

Curiously, the non-deterministic version of PacMan (Atari Ms Pac Man, the one in which phantoms take a random action when close to bifurcations) has only been solved recently by an approach that decomposes the reward function into different components (see link).

Deep Mind also has proven superiority of a program, Alpha Go and Alpha Go Zero (learning from scratch without using human gameplay, see link) in the ancient game of GO. Alpha Go Zero also learned to play chess better than humans. It’s interesting to analyze how Alpha Go Zero uses different openings during the course of learning, proving that sometimes the human culture assigns value to openings in a human biased way.

The scientific achievements of these algorithms are in debate (for a discussion in the field of GO see link) and we still need to know the real contributions that they can bring to society. One of the main reasons of this debate is that the computations needed to achieve them increase exponentially (see the debate in OpenAI article link).

For a historical review see the Wikipedia page link and the book Artificial Intelligence and Games (2018, link).